Observations

The Universal Nature of the Challenge

The most striking observation from examining successful AI governance implementations is the remarkable similarity of challenges across all organisational types. Corporate executives, government leaders, nonprofit directors, and academic administrators all face identical fundamental issues:

- Maintaining accountability for autonomous decisions.

- Preserving stakeholder trust.

- Managing personal liability exposure.

- Ensuring mission alignment.

This universality stems from the nature of agentic AI itself. These systems operate according to consistent technical principles regardless of their deployment context. They create the same categories of risk, namely (i) autonomous action beyond intended boundaries, (ii) algorithmic bias affecting stakeholders, (iii) privacy breaches, (iv) system failures disrupting critical services, and (v) transparency failures undermining legitimacy, whether processing insurance claims, welfare applications, donor contributions, or student admissions.

The governance solutions are equally universal. Every successful implementation requires clear accountability structures with designated human oversight, technical boundaries that prevent unauthorised actions, complete audit trails for all decisions, a transparent explanation of decision-making processes, and robust incident response procedures. These requirements transcend organisational form because they address the fundamental challenge of maintaining human control over autonomous systems.

The Governance Imperative

Traditional governance models assume human decision-makers who can explain their reasoning, accept responsibility for outcomes, and modify their approach based on feedback. Agentic AI systems challenge every assumption underlying conventional oversight structures. Unlike bounded automations, agentic AI can be designed to make decisions without human approval, adapt its actions independently, and operate at speeds that preclude real-time human intervention.

This creates a profound governance gap that many leaders have failed to recognise. Existing board oversight, management reporting, and operational control systems were designed for human actors, not autonomous systems. Attempting to govern AI with traditional structures is akin to regulating aviation with maritime law. The fundamental assumptions no longer apply.

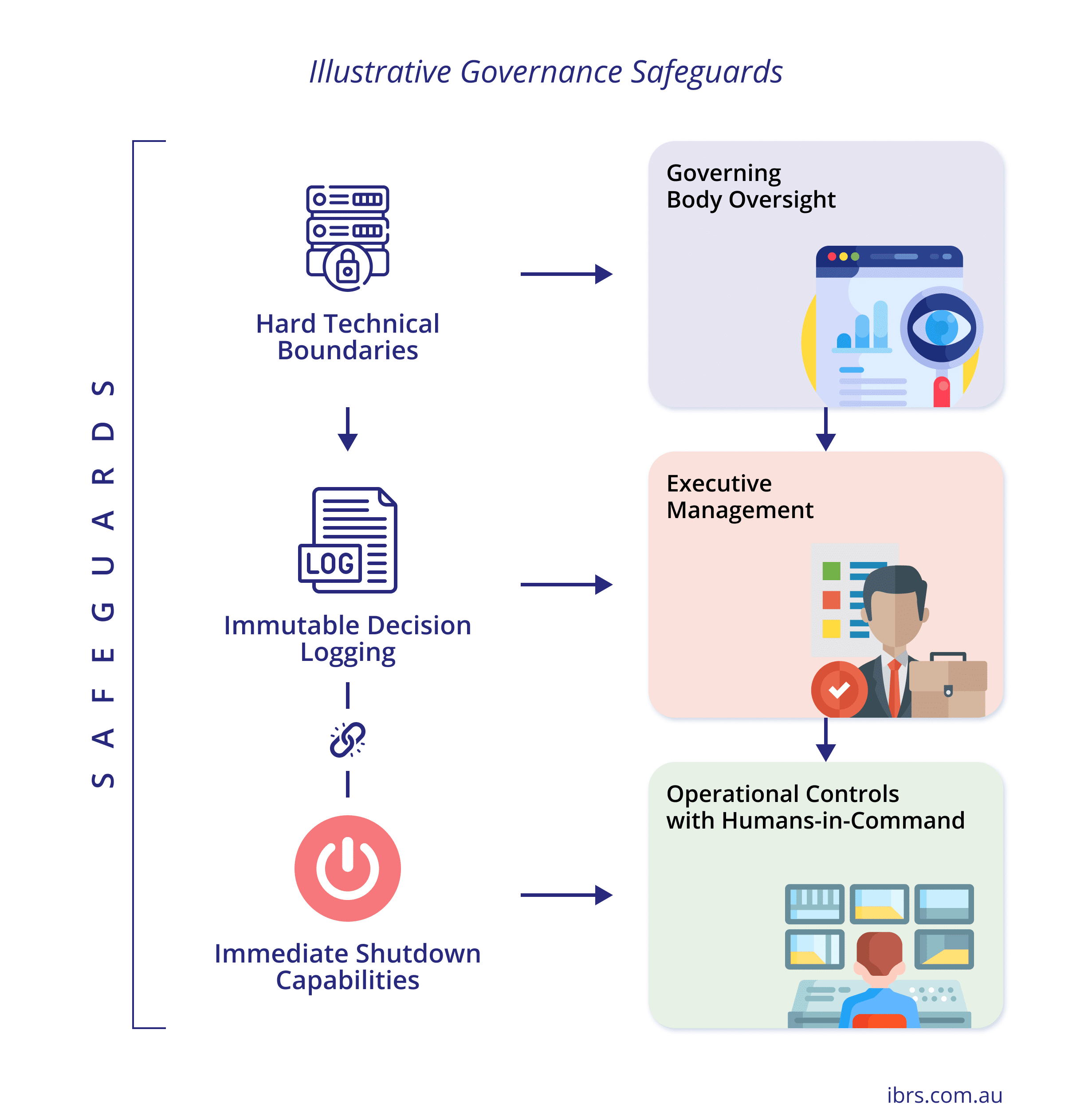

Successful organisations have recognised that AI governance requires new frameworks specifically designed for autonomous systems. These frameworks establish three critical layers:

- Governing Body Oversight: Retains ultimate accountability for AI strategy and risk management.

- Executive Management: Holds designated senior accountability for AI oversight.

- Operational Controls with Humans-in-Command: Maintain real-time authority over AI systems.

The most sophisticated governance structures treat AI systems as autonomous agents, requiring management approaches similar to those used for human employees, but with additional safeguards that reflect their non-human nature. This includes hard technical boundaries that cannot be overridden, complete and immutable decision logging for audit purposes, and immediate shutdown capabilities for crisis situations.

The Liability Reality

Perhaps the most sobering observation is the extent of personal liability exposure that leaders face when they fail to implement appropriate AI governance. Legal frameworks across all sectors have evolved to hold leaders personally accountable for governance failures, and this accountability explicitly extends to AI systems.

In Australia, the stepping stone principle means leaders need not be directly involved in AI failures to face personal liability[2]. Inadequate governance systems, insufficient oversight mechanisms, or failure to understand AI risks are sufficient grounds for personal accountability. This liability exists regardless of technical sophistication.

With reference to existing precedents related to cyber security and general duties of care, Australian courts and legal experts indicate that a failure to implement proper AI risk management could be seen as a breach of a leader’s duty of reasonable care[3].

The Australian Securities and Investments Commission (ASIC) and the Federal Court of Australia have signalled that they will enforce obligations for both cyber security and AI governance under existing law, rather than waiting for future AI-specific regulation[4]. This establishes a clear legal expectation that leaders in all sectors cannot fulfil their fiduciary obligations without implementing proper AI risk management. In essence, leaders must not treat agentic AI as a black box. They are expected to understand, question, and take responsibility for the safe, ethical, and compliant implementation of AI in the workplace, or face potential legal and regulatory consequences. Ignorance is not a defence.

This creates a stark choice: invest in comprehensive AI governance or accept substantial personal risk. The cost of establishing proper governance pales in comparison to the potential personal and organisational consequences of failure.

The Speed of Change

AI adoption rates across all sectors are currently outpacing the velocity of previous technological disruptions, including internet adoption, cloud migrations, and the evolution of user interaction systems. The government’s objective is for automation and AI to enable improved and faster government services while balancing safety and security[5]. Nonprofits see AI-driven operational advantages among peer organisations[6]. Academic institutions confront student and research demands for AI integration.

Vendor hype and media buy-in around an imperative for rapid adoption create a dangerous dynamic in which organisations feel pressured to deploy AI systems before establishing proper governance frameworks. Organisations that resist this pressure and invest in governance first can set clear boundaries, threshold conditions, and required returns for AI initiatives. This establishes a solid platform for building sustainable competitive advantages that compound over time.

Early adopters with robust governance are seeing transformational improvements in operational efficiency, decision-making speed, stakeholder satisfaction, and cost management. Recent studies increasingly underscore that transformational results emerge within environments where strong governance enables controlled, ethical, and resilient deployment of agentic AI. Governance is repeatedly portrayed as a fundamental enabler[7], not an optional add-on, for capturing value from agentic AI technology safely and sustainably.

Meanwhile, organisations with weak governance face mounting incidents, regulatory scrutiny, and stakeholder complaints, and risk reputational damage[8] that undermines their core mission.

The Human Element

Contrary to popular narratives about AI replacing human workers, the most successful implementations focus on augmenting human capabilities rather than substituting for human judgment[9]. The organisations achieving the greatest advantages treat AI systems as sophisticated tools that enhance human decision-making rather than as autonomous systems that operate independently of human oversight[10].

This human-centric approach requires fundamental changes in leadership philosophy. Leaders must transition from controlling processes to influencing outcomes, from reactive problem-solving to predictive risk management, and from hierarchical command structures to collaborative partnerships between humans and AI systems.

The most critical observation is that successful AI deployment depends more on human factors, such as leadership quality, governance systems, cultural adaptation, and stakeholder communication, than on technical sophistication. The technology has become commoditised; the governance and leadership capabilities that harness it responsibly remain rare and valuable.

Next Steps

Immediate Actions for All Leaders

Leaders must inform themselves, beginning with a comprehensive audit of current AI usage within their organisation. Many leaders discover there are already agentic AI systems operating without proper governance oversight. This audit should identify every AI system, assess its risk levels, and document its decision-making authorities and oversight mechanisms.
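The audit described above could be captured in a simple register. The following is a minimal sketch only, assuming a hypothetical record structure: the field names, risk levels, and example systems are illustrative and are not drawn from any specific framework.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AISystemRecord:
    """One row of a hypothetical AI system register."""
    name: str
    risk_level: RiskLevel
    decision_authority: str         # what the system may decide on its own
    accountable_owner: str = ""     # empty string means no named owner
    oversight_mechanism: str = ""   # empty string means none documented


def governance_gaps(register):
    """Return names of systems lacking an owner or an oversight mechanism."""
    return [
        record.name
        for record in register
        if not record.accountable_owner or not record.oversight_mechanism
    ]


# Illustrative entries only; these systems are invented for the example.
register = [
    AISystemRecord(
        "claims-triage-agent",
        RiskLevel.HIGH,
        "approves claims under a set value",
        accountable_owner="Chief Risk Officer",
        oversight_mechanism="daily sample review",
    ),
    AISystemRecord(
        "email-drafting-assistant",
        RiskLevel.LOW,
        "drafts replies for staff to send",
    ),
]

print(governance_gaps(register))
```

A register of this kind gives the governing body a single view of which systems are operating without documented oversight, which is the gap the audit is intended to expose.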

Simultaneously, establish executive accountability by designating a senior leader as responsible for AI governance. This cannot be delegated to IT departments or external consultants. It requires C-suite or equivalent-level ownership. This executive must have the authority to approve AI deployments, establish governance policies, and report directly to the organisation’s governing board on AI performance and risks.

To ensure transparency, public service organisations should conduct public consultations before deploying high-impact AI systems.

Establish an AI governance committee with cross-functional expertise, comprising representatives from legal, risk management, operations, and technical leadership. This committee should meet frequently, with authority to approve or reject AI projects based on risk assessment criteria. The committee charter must specify budget approval thresholds, risk tolerance parameters, and escalation procedures to be submitted to the organisation’s governing board.

Practical Governance Framework Development[11]

As a matter of principle, AI governance is not a one-time event but a continuous process of monitoring, adapting, and updating. Organisations’ internal governance must also evolve as AI technology changes.

Include a cadence or a set of triggers for reviewing and updating AI governance, e.g., deployment of new AI systems, changes in organisational goals, new regulatory requirements, or judicial precedent.

Develop comprehensive AI governance policies that specify approval processes for different risk categories of AI systems:

- High-Risk Systems: These systems pose a significant risk of harm and should require approval from the governing body.

- Medium-Risk Systems: Support human decision-making and require executive approval.

- Low-Risk Systems: Perform routine tasks under human supervision and should proceed with operational approval.
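The three approval tiers above can be expressed as a small routing rule. This is an illustrative sketch rather than a prescribed method: the two classification questions and the names `classify` and `required_approver` are assumptions made for the example, and a real policy would use the organisation's own risk assessment criteria.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


# Approval level for each tier, following the three categories in the text.
APPROVER_BY_TIER = {
    RiskTier.HIGH: "governing body",
    RiskTier.MEDIUM: "executive",
    RiskTier.LOW: "operational management",
}


def classify(significant_harm_potential: bool, supports_human_decisions: bool) -> RiskTier:
    """Assign a tier using two simplified, assumed screening questions."""
    if significant_harm_potential:
        return RiskTier.HIGH
    if supports_human_decisions:
        return RiskTier.MEDIUM
    # Otherwise treated as a routine task performed under human supervision.
    return RiskTier.LOW


def required_approver(tier: RiskTier) -> str:
    """Look up who must approve a system in the given tier."""
    return APPROVER_BY_TIER[tier]


tier = classify(significant_harm_potential=False, supports_human_decisions=True)
print(tier.value, "->", required_approver(tier))
```

Checking harm potential first means a system that both supports human decisions and poses a significant risk of harm is always escalated to the governing body.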

Implement technical controls, including hard boundaries that prevent AI systems from exceeding defined parameters, immutable and complete decision logging for audit purposes, human override capabilities for immediate intervention, and transparent explainability features that enable understanding of AI decision-making processes.
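To make three of these controls concrete, the sketch below wraps a single hypothetical action (approving a payment) with a hard limit, a human override, and a hash-chained decision log. The class name `GovernedAgent`, the payment scenario, and the limit value are assumptions for illustration. Hash chaining makes tampering detectable rather than impossible, so it is only one possible approach to the logging requirement.

```python
import hashlib
import json


class BoundaryViolation(Exception):
    """Raised when a proposed action exceeds a hard limit."""


class GovernedAgent:
    """Wraps one hypothetical action with three governance controls."""

    GENESIS = "0" * 64  # placeholder hash that anchors the first log entry

    def __init__(self, max_payment):
        self._max_payment = max_payment  # hard boundary, fixed at construction
        self._halted = False             # set by the human override
        self.log = []                    # hash-chained decision entries

    def halt(self):
        """Human override: block every further action."""
        self._halted = True

    def _record(self, decision):
        # Each entry's hash covers the previous hash, chaining the log together.
        prev_hash = self.log[-1]["hash"] if self.log else self.GENESIS
        payload = json.dumps(decision, sort_keys=True)
        entry_hash = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
        self.log.append({"decision": decision, "prev": prev_hash, "hash": entry_hash})

    def approve_payment(self, amount, reason):
        """Approve a payment, logging the outcome whether allowed or blocked."""
        decision = {"amount": amount, "reason": reason}
        if self._halted:
            self._record({**decision, "outcome": "blocked: halted"})
            raise RuntimeError("agent halted by human override")
        if amount > self._max_payment:
            self._record({**decision, "outcome": "blocked: over limit"})
            raise BoundaryViolation(f"{amount} exceeds limit {self._max_payment}")
        self._record({**decision, "outcome": "approved"})
        return True

    def verify_log(self):
        """Recompute the chain; return False if any entry was altered."""
        prev_hash = self.GENESIS
        for entry in self.log:
            payload = json.dumps(entry["decision"], sort_keys=True)
            expected = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True
```

Blocked attempts are logged as well as approved ones, so the audit trail shows what the system tried to do and not only what it was permitted to do. In practice the log would also need to be held in storage the AI system itself cannot modify.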

Establish incident response procedures specifically designed for AI failures. These procedures should include immediate containment protocols, stakeholder notification requirements, investigation methodologies, remediation strategies, and post-incident improvement processes. Regular testing of these procedures through tabletop exercises ensures readiness in the event of real incidents.

Workforce Transformation

Launch comprehensive training programmes tailored to different organisational levels. Governing body members, executive leadership, and senior management require an understanding of personal liability risks, governance oversight responsibilities, and strategic implications of AI deployment. Management needs operational oversight skills, performance monitoring capabilities, and change management expertise. Operational staff must develop AI collaboration skills, quality assurance techniques, and safety procedures.

Create human-in-command roles for every AI system with clear authority to monitor performance, intervene when necessary, and maintain accountability for system outcomes. These individuals need both technical understanding and organisational authority to ensure effective oversight.

Develop change management strategies that engage employees as partners in AI transformation rather than victims of technological displacement. This includes involving staff in AI system design, providing retraining opportunities, creating new roles that leverage uniquely human skills, and recognising successful human-AI collaboration.

Vendor and Partnership Management

Establish rigorous due diligence processes for AI vendors, including financial stability assessments, technical architecture reviews, security control evaluations, and regulatory compliance verifications. Contract terms must include robust control and performance regimes with substantive financial penalties, audit rights for AI decision processes, data ownership guarantees, liability protection, and termination assistance. Ensure suitable limitations are in place regarding the vendor’s ability to unilaterally vary terms. This includes variations made through changes to Terms of Use, user click-through notices, or website publication.

Implement ongoing vendor oversight, including monthly performance reviews, quarterly technical assessments, annual compliance audits, regular contract optimisation, and continuous benchmarking against market standards. This ensures that vendor relationships support, rather than undermine, organisational AI governance objectives.

Stakeholder Engagement

Develop transparent communication strategies that proactively disclose AI usage to stakeholders, explain decision-making processes in plain language, provide human escalation options for AI-driven decisions, and offer regular performance reports. This transparency fosters trust and legitimacy, which are essential for long-term success.

Develop stakeholder feedback mechanisms that facilitate the continuous improvement of AI systems, informed by user experience and community needs. This participatory approach ensures AI deployment serves stakeholder interests rather than purely organisational efficiency goals.

Looking Forward to the Horizon

The Transformation of Leadership

The emergence of agentic AI signals a fundamental evolution in leadership requirements. Future leaders must develop systems-thinking capabilities that allow them to understand complex interactions between AI systems, human behaviour, and organisational outcomes. They need risk intelligence that balances innovation speed with governance requirements while maintaining stakeholder trust.

Technical literacy becomes essential, not to manage AI systems directly, but to make informed strategic decisions about AI capabilities and limitations. Leaders must possess ethical decision-making skills to navigate complex issues around AI bias, privacy, and human autonomy. Most importantly, they need stakeholder communication abilities to explain AI use and safeguards in accessible terms.

Governing bodies for the future require different compositions and competencies. Consider adding members with technology leadership experience in AI-driven sectors, regulatory expertise in data and AI governance, crisis management experience with complex technical systems, and stakeholder communication skills for emerging technology issues.

The Evolution of Organisational Design

Successful organisations will restructure around human-AI partnerships rather than traditional hierarchical models. This requires new roles that leverage uniquely human capabilities, such as creative problem-solving, ethical reasoning, stakeholder relationship management, and strategic thinking, while AI systems handle data processing, pattern recognition, routine decision-making, and operational coordination.

Organisational cultures must evolve to embrace continuous learning as AI systems constantly adapt and improve. This cultural transformation requires leadership that models curiosity, resilience, and ethical reasoning while maintaining accountability standards that preserve human dignity and organisational mission.

The Imperative for Sector Leadership

AIGovX creates sustainable competitive advantages. By investing in robust governance frameworks, transparent stakeholder communication, and the ethical deployment of AI, organisations build trust and capabilities that competitors cannot easily replicate.

AIGovX enables faster innovation as stakeholders develop confidence in organisational AI capabilities. It reduces regulatory risk through proactive compliance and relationship building. It attracts top talent who prefer working with organisations that demonstrate responsible technology leadership.

The organisations that emerge as AIGovX leaders will be those that publicly commit to responsible AI principles, share governance best practices with industry peers, participate actively in regulatory development, and demonstrate measurable improvements in stakeholder outcomes through AI deployment.

The Global Transformation

Agentic AI represents the beginning of a broader transformation where automated systems become integral to all organisational operations. Leaders who master the governance challenges of early AI deployment position their organisations to leverage increasingly sophisticated autonomous capabilities while maintaining human control and stakeholder trust.

Ethical leaders leverage AI to benefit organisations and communities, prioritising human well-being and dignity. AI must serve human interests, not subjugate them, requiring human oversight to protect rights and prevent misuse. AI transformation extends beyond individual organisations to reshape entire sectors and societies[12], e.g.:

- AI-driven diagnostics, personalised treatment plans, and drug discovery have a significant impact on healthcare outcomes globally. AI algorithms analyse medical imaging at scale, improving disease detection and patient outcomes across populations. Hospitals and research centres adopting AI improve efficiency, reduce costs, and accelerate innovation, affecting health systems and public health worldwide.

- AI influences public sector transformation, including predictive policing, traffic management, urban planning, and public health monitoring. Governments globally integrate AI into services to improve efficiency, resource management, and citizen engagement, reshaping how societies are governed and services are delivered[13].

Leaders who act decisively today to establish responsible AI governance will contribute positively to defining the long-term standards that govern autonomous systems.

Footnotes

1. In this context, AIGovX refers to the actions that close the gap between what is and what could be.

2. The stepping stone principle is a legal concept where a director’s liability for a breach of duty is established in two stages. First, the company’s breach of law is proven, which then serves as a stepping stone to show that the director failed their duty of care by allowing the company to be exposed to a foreseeable risk of harm. A duty of care is also a fundamental feature of Australian public sector governance, applying to a broad range of entities including Commonwealth officials and state public entity directors, and enforced through specific legislation or codes of conduct across different jurisdictions.

3. For example, drawing on existing legal precedents and authoritative guidance, a leader’s duty of care and diligence under the Corporations Act 2001 (Cth) extends to a company’s use of AI. Legal and regulatory bodies, including the Australian Securities and Investments Commission (ASIC), have reinforced this by showing that a failure to manage foreseeable technological risks, such as those related to cyber security in the landmark case of ASIC v RI Advice Group Ltd, can constitute a breach of duty.

4. ‘ASIC warns of governance gap in AI adoption’, Australian Institute of Company Directors, 2024.

5. ‘Automation and Artificial Intelligence Strategy 2025–27’, Services Australia, 2025.

6. Independent industry reviews and scholarly research studies offer authoritative evidence that nonprofits observe and report tangible, AI-driven operational advantages among peer organisations, spanning efficiency, productivity, donor engagement, and mission delivery. Source: ‘2025 AI Benchmark Report: How Artificial Intelligence Is Changing the Nonprofit Sector; Empowering Impact: The Perils and Promise of AI in the Nonprofit Sector’, NonProfit Pro, 2025.

7. ‘Seizing the Agentic AI Advantage’, McKinsey & Co., 2025; ‘Adoption of AI and Agentic Systems: Value, Challenges, and Pathways’, Berkeley Center for Information Technology, 2025; ‘Artificial Intelligence: What Directors Need to Know’, PwC, 2023.

8. The Commonwealth Bank of Australia faced a reputational setback in August 2025 after a failed AI integration. A plan to replace staff with a voice bot was reversed, and an apology issued following union pressure and public scrutiny.

9. ‘The state of AI: How organisations are rewiring to capture value’, McKinsey & Co., 2025: This McKinsey survey from March 2025 highlights that a CEO’s oversight of AI governance and the redesign of workflows are the two attributes most correlated with achieving a positive bottom-line impact from AI.

10. ‘How BCG Is Revolutionising Consulting With AI: A Case Study’, Forbes, 2024: BCG’s internal use of AI tools like Deckster and GENE demonstrates how AI can liberate 70 per cent of employee hours, which are then reinvested into higher-value client work rather than resulting in headcount reduction.

11. Conceptual guidance can be found in the Australian Securities and Investments Commission’s ‘Beware the gap: Governance arrangements in the face of AI innovation’, ASIC, 2024, and the Australian Institute of Company Directors’ ‘A Director’s Guide to AI Governance’, AICD and UTS, 2024. Other leading technical frameworks include the US National Institute of Standards and Technology’s ‘Artificial Intelligence Risk Management Framework’, NIST, 2023, which provides a checklist across core functions; the Australian Signals Directorate’s broader cyber security frameworks including ‘Information security manual’, ASD, 2025, ‘Guidelines for secure AI system development’, ASD, 2023, and ‘Engaging with artificial intelligence’, ASD, 2024; and the NSW government’s ‘Artificial Intelligence Assessment Framework’, NSW Government, 2024.

12. ‘AI revolutionising industries worldwide: A comprehensive overview of its diverse applications’, ScienceDirect, 2024.

13. ‘How artificial intelligence is transforming the world’, Brookings, 2018.